A “White Box” Model: Improving Transparency and User Confidence Through Human-AI Interaction Techniques

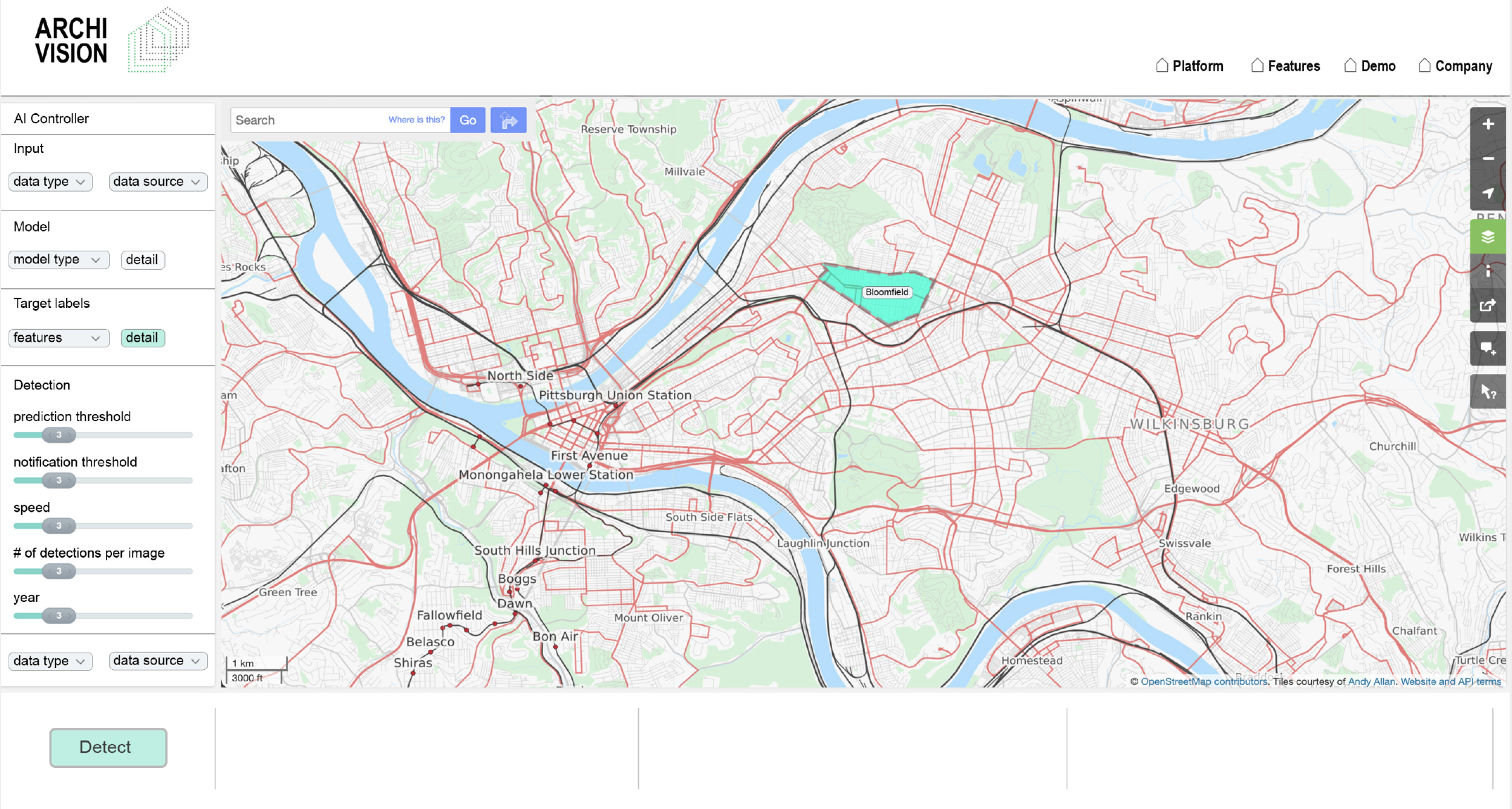

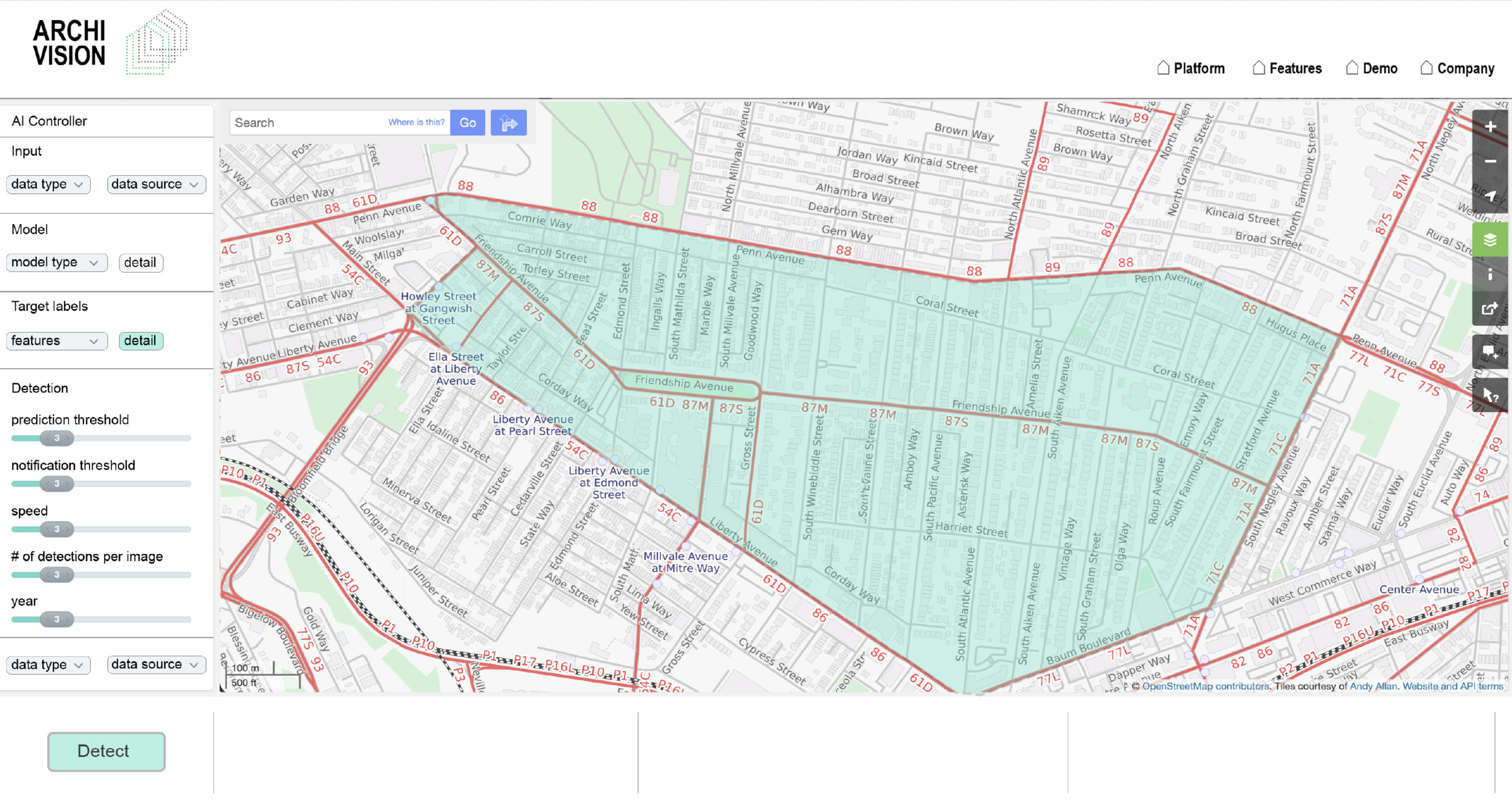

The proposed Archi Vision object detection model incorporates several features that make its operation and output transparent. As mentioned previously, users have direct control over various model parameters, giving them both coarse- and fine-grained control over model performance, architecture, and operation. More importantly, Archi Vision incorporates a unique human-AI feedback loop that allows users to review and improve model performance over time through their own input and corrections.

Archi Vision presents the user with potential object misclassifications and detections so that the user can identify mistakes and feed them back into the training loop, correcting and improving the model over time. Users can also set a confidence threshold that determines which potentially misclassified detections are presented to them for review. For example, a skeptical user may specify that any detection with a confidence score below 90% must be presented for review. A less skeptical user may set the threshold at 60%, so that any detection and classification below 60% is flagged for review while anything above it is not.
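The threshold-based review step can be illustrated with a minimal Python sketch. It is not Archi Vision's implementation. The `Detection` record, the `select_for_review` and `collect_corrections` helpers, the `review_fn` callback standing in for the user, and the example labels are all hypothetical. The sketch also assumes that confidence is a score in [0, 1], that "below the threshold" means strictly less than it, and that confirmed or corrected detections are kept with confidence 1.0 so they can be added to a retraining set. The section does not specify how retraining is actually performed.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Detection:
    """Hypothetical record for one object detection."""
    image_id: str
    label: str
    confidence: float  # assumed model confidence in [0, 1]
    box: tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def select_for_review(detections: list[Detection], threshold: float) -> list[Detection]:
    """Return detections whose confidence is strictly below the user's threshold."""
    if not 0.0 <= threshold <= 1.0:
        raise ValueError("threshold must be between 0 and 1")
    return [d for d in detections if d.confidence < threshold]


def collect_corrections(
    flagged: list[Detection],
    review_fn: Callable[[Detection], Optional[str]],
) -> list[Detection]:
    """Ask the reviewer about each flagged detection.

    review_fn (a stand-in for the user) returns the correct label, or None to
    reject the detection as a false positive. Confirmed or corrected detections
    are returned with confidence 1.0 as candidate retraining examples (an assumption).
    """
    corrections = []
    for det in flagged:
        label = review_fn(det)
        if label is not None:
            corrections.append(Detection(det.image_id, label, 1.0, det.box))
    return corrections


if __name__ == "__main__":
    detections = [
        Detection("img1", "door", 0.95, (0, 0, 10, 20)),
        Detection("img2", "window", 0.75, (5, 5, 15, 15)),
        Detection("img3", "column", 0.55, (2, 0, 4, 30)),
    ]
    # Skeptical user: review anything below 90% confidence.
    print([d.image_id for d in select_for_review(detections, 0.90)])  # ['img2', 'img3']
    # Less skeptical user: review only anything below 60% confidence.
    print([d.image_id for d in select_for_review(detections, 0.60)])  # ['img3']
```

Raising the threshold widens the review queue, trading more of the user's time for more opportunities to catch and correct errors before they are fed back into training.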